Introduction

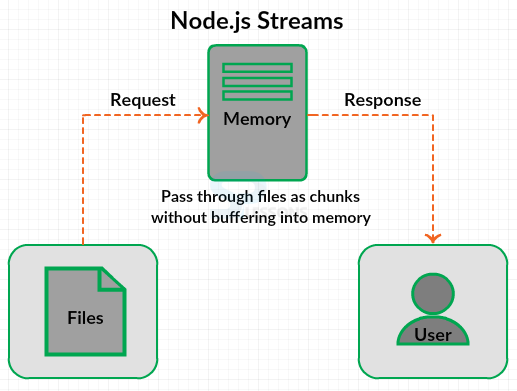

This chapter demonstrates Node.js Streams. Node.js is excellent at handling input/output operations, and the user can use streams when working on an app that performs such operations. The following concepts are covered.

- Streams

- Stream Piping

- Stream Chaining

Description

Working with a Node.js stream is similar to working with a Node.js EventEmitter that has some special methods, because every stream is an EventEmitter. With Node.js Streams, the user can read data from any source and pipe that data to the required destination. The figure below demonstrates the simple flow of Node.js Streams.

Streams are mainly classified into four types as follows.

- Readable Streams (data can be read from them).

- Writable Streams (data can be written to them).

- Duplex Streams (data can be both read and written).

- Transform Streams (duplex streams that modify or transform the data as it is written and read).
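Here is a minimal sketch of the four types, assuming a recent Node.js version. It creates one instance of each class from the built-in stream module using the simplified constructor options (read, write, transform) and checks which capabilities each instance has. The no-op method bodies are placeholders for illustration only.

```javascript
const { Readable, Writable, Duplex, Transform } = require('stream');
const EventEmitter = require('events');

// One instance of each stream type, built with the simplified constructor API.
// The method bodies are placeholders that do no real work.
const streams = {
  readable: new Readable({ read() {} }),
  writable: new Writable({
    write(chunk, encoding, callback) { callback(); },
  }),
  duplex: new Duplex({
    read() {},
    write(chunk, encoding, callback) { callback(); },
  }),
  transform: new Transform({
    transform(chunk, encoding, callback) { callback(null, chunk); },
  }),
};

// Check which capabilities each type has
const kinds = {};
for (const [name, s] of Object.entries(streams)) {
  kinds[name] = {
    readable: s instanceof Readable,
    writable: s instanceof Writable,
    eventEmitter: s instanceof EventEmitter,
  };
}

console.table(kinds);
```

Duplex and Transform instances report as both readable and writable, and every stream is an EventEmitter.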

Description

Readable streams in Node.js allow the user to read data from a source. A readable stream is an EventEmitter that emits events at different points, for example data when a chunk is available and end when there is no more data. These events can be used while working with the stream.
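The sketch below records the order in which a readable stream emits its data and end events. It assumes Node.js 12 or later for Readable.from(). The in-memory sample data is hypothetical and stands in for a file.

```javascript
const { Readable } = require('stream');

// Hypothetical in-memory source with two chunks
const readable = Readable.from(['Node.js ', 'Streams']);
const events = [];

// Attaching a 'data' listener switches the stream into flowing mode
readable.on('data', (chunk) => events.push('data: ' + chunk));

// 'end' fires once, after the last chunk has been delivered
readable.on('end', () => {
  events.push('end');
  console.log(events.join('\n'));
});

// 'error' fires if reading fails
readable.on('error', (err) => console.error('error:', err.message));
```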

Example

To read data from a stream, attach a callback to the data event. Whenever a chunk of data is available, the readable stream emits the data event and the callback runs with that chunk. The example code below demonstrates a readable Node.js stream. Before running the code, create a .txt file containing a message of your choice (the code reads output.txt).

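[c]
var fs = require('fs');

// Open a readable stream on an existing text file
var readableStream = fs.createReadStream('output.txt');
var data = '';

// Decode chunks as UTF-8 strings so multi-byte characters are not split
readableStream.setEncoding('utf8');

// 'data' is emitted for every chunk read from the file
readableStream.on('data', function (chunk) {
  data += chunk;
});

// 'end' is emitted once there is no more data to read
readableStream.on('end', function () {
  console.log(data);
});

// 'error' is emitted if, for example, the file does not exist
readableStream.on('error', function (err) {
  console.error('Read failed:', err.message);
});
[/c]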

Result:

Run the above code with node to get the output shown in the image below.
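Readable streams are also async iterable, so they can be consumed with for await...of as an alternative to the data event. The sketch below assumes a recent Node.js version. It uses a hypothetical sample file, stream-demo.txt, created in the OS temp directory so the example is self-contained.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical sample file in the OS temp directory
const file = path.join(os.tmpdir(), 'stream-demo.txt');
fs.writeFileSync(file, 'Node.js Streams\n');

let data = '';

async function readAll(filePath) {
  // encoding: 'utf8' makes every chunk a string instead of a Buffer
  const stream = fs.createReadStream(filePath, { encoding: 'utf8' });
  let text = '';
  // Each iteration yields one chunk; a stream error rejects the loop
  for await (const chunk of stream) {
    text += chunk;
  }
  return text;
}

readAll(file)
  .then((text) => {
    data = text;
    console.log(data);
  })
  .catch((err) => console.error('Read failed:', err.message));
```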

Data can also be read from a stream by calling read() repeatedly inside a readable event handler until it returns null, which means the stream's internal buffer is empty. This example reads file.txt.

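[c]
var fs = require('fs');

var readableStream = fs.createReadStream('file.txt');
var data = '';
var chunk;

// Decode chunks as UTF-8 strings
readableStream.setEncoding('utf8');

// 'readable' is emitted when data is available in the internal buffer
readableStream.on('readable', function () {
  // read() returns null once the buffer has been drained
  while ((chunk = readableStream.read()) !== null) {
    data += chunk;
  }
});

readableStream.on('end', function () {
  console.log(data);
});

readableStream.on('error', function (err) {
  console.error('Read failed:', err.message);
});
[/c]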

Result:

Run the above code with node to get the output shown in the image below.
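The data returned by read() comes from the stream's internal buffer, which a readable stream fills by calling push(). The sketch below uses a hypothetical counter stream that is not part of this tutorial. It implements read() with the simplified constructor, pushes three chunks followed by null to signal the end, and drains the buffer with the same read() loop used above.

```javascript
const { Readable } = require('stream');

// Hypothetical counter stream: pushes "1", "2", "3" and then ends
let current = 0;
const counter = new Readable({
  read() {
    current += 1;
    // push(null) tells consumers there is no more data
    this.push(current <= 3 ? String(current) : null);
  },
});

const received = [];

counter.on('readable', () => {
  let chunk;
  // read() returns null once the internal buffer is empty
  while ((chunk = counter.read()) !== null) {
    received.push(chunk.toString());
  }
});

counter.on('end', () => console.log(received));
```

The stream is not in object mode, so consecutive chunks may be merged in the buffer. A single read() call can therefore return more than one pushed chunk.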

Description

Writable streams in Node.js are used to write data to a destination. Like readable streams, they are EventEmitters that emit events at different points, and these events can be used while working with the stream. The write() method of a writable stream takes three arguments as follows.

- chunk of data - the data to write, which can be either a Buffer or a string.

- encoding - the character encoding of the chunk; it applies only when the chunk is a string.

- callback - an optional function that is called once the chunk has been handled; if an error occurs, it receives the error as its first argument.
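The three arguments can also be observed from the receiving side in a custom writable stream. In the sketch below, the in-memory collector stream is hypothetical. Setting decodeStrings: false lets string chunks reach write() unchanged together with their encoding. Buffer chunks arrive with the encoding 'buffer'.

```javascript
const { Writable } = require('stream');

const received = [];

// Hypothetical in-memory writable stream that records each chunk
const collector = new Writable({
  // Keep string chunks as strings so the encoding argument stays meaningful
  decodeStrings: false,
  write(chunk, encoding, callback) {
    received.push({ chunk: chunk.toString(), encoding: encoding });
    callback(); // signal that this chunk has been handled
  },
});

// write(chunk, encoding, callback)
collector.write('Node.js', 'utf8', () => console.log('first chunk handled'));
collector.write(Buffer.from(' Streams'));
collector.end(() => console.log(received));
```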

Example

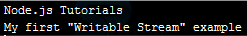

The code snippet below demonstrates a simple writable stream.

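[c]
var fs = require('fs');

// Create (or overwrite) output1.txt and open a writable stream to it
var writableStream = fs.createWriteStream('output1.txt');

writableStream.write('Node.js Tutorials\n');
writableStream.write('My first "Writable Stream" example\n');

// Signal that no more data will be written
writableStream.end();
[/c]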

Running the above code creates a file output1.txt in the working directory containing the messages passed to the writableStream.write() function, as shown.

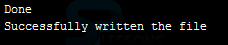

To know whether the data has been written to the file, attach listeners to the events emitted by the stream, as shown below.

[c]
var fs = require('fs');

var writableStream = fs.createWriteStream('output1.txt');

// 'finish' is emitted after end() is called and all data has been flushed
writableStream.on('finish', function () {
  console.log('Done');
});

writableStream.write('Node.js Tutorials\n');
writableStream.write('My first "Writable Stream" example\n');

// The callback passed to end() also runs once the stream has finished
writableStream.end(function () {
  console.log('Successfully written the file');
});
[/c]

In the above code, the finish event is emitted after end() has been called and all the data has been flushed to the underlying system. Its listener then displays a message. Running the above code produces the output shown below.

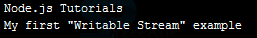

Now check the file output1.txt in the working directory; it should contain the text shown below.
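When writing larger amounts of data, write() returns false once the stream's internal buffer is full, and the stream emits drain when it is ready for more. The sketch below respects that signal and waits for finish before reading the file back. It assumes Node.js 11.13 or later for events.once(). The file name output1-demo.txt in the OS temp directory is hypothetical.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { once } = require('events');

// Hypothetical output file in the OS temp directory
const file = path.join(os.tmpdir(), 'output1-demo.txt');
let written = '';

async function writeLines(filePath, lines) {
  const writableStream = fs.createWriteStream(filePath);
  for (const line of lines) {
    // write() returns false when the internal buffer is full;
    // wait for 'drain' before writing more (backpressure)
    if (!writableStream.write(line)) {
      await once(writableStream, 'drain');
    }
  }
  writableStream.end();
  // 'finish' means all data has been flushed to the file
  await once(writableStream, 'finish');
}

writeLines(file, ['Node.js Tutorials\n', 'My first "Writable Stream" example\n'])
  .then(() => {
    written = fs.readFileSync(file, 'utf8');
    console.log(written);
  })
  .catch((err) => console.error('Write failed:', err.message));
```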

Description

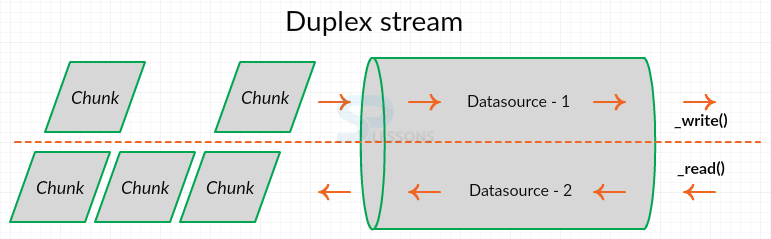

A stream that can be both read from and written to is known as a duplex stream. A duplex stream combines two sides that are independent of each other: one for data flowing in and one for data flowing out. With these two sides, data can be received and transmitted easily. A network socket is the classic example of a duplex stream. The figure below demonstrates the flow of a duplex stream.
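Here is a minimal sketch of the two independent sides, assuming a recent Node.js version. The hypothetical duplex stream below produces two fixed greetings on its readable side, while its writable side simply records whatever is written to it. Nothing written is ever read back, which shows that the two sides do not share data.

```javascript
const { Duplex } = require('stream');

const written = [];  // data that arrived on the writable side
const readBack = []; // data that came out of the readable side

// Hypothetical source for the readable side
const greetings = ['hello', 'world'];

const duplex = new Duplex({
  // Readable side: push the next greeting, then null to end
  read() {
    this.push(greetings.length ? greetings.shift() : null);
  },
  // Writable side: record each chunk independently of the readable side
  write(chunk, encoding, callback) {
    written.push(chunk.toString());
    callback();
  },
});

duplex.on('data', (chunk) => readBack.push(chunk.toString()));
duplex.on('end', () => console.log('read side ended:', readBack));
duplex.on('finish', () => console.log('write side finished:', written));

duplex.write('ping');
duplex.write('pong');
duplex.end();
```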

Example

The example code below implements a duplex stream whose two sides are independent of each other. The _read() method supplies data to the readable side (a timestamp queued every second), and the _write() method receives the data written to the writable side (and logs it).

[c]
var stream = require('stream');
var util = require('util');

// Fall back to the readable-stream package only on very old Node.js versions
var Duplex = stream.Duplex || require('readable-stream').Duplex;

// Readable side emits the current time; writable side logs what is written
function DRTimeWLog(options) {
  if (!(this instanceof DRTimeWLog)) {
    return new DRTimeWLog(options);
  }
  Duplex.call(this, options);
  this.readArr = [];
  // Queue a timestamp every second for the readable side
  this.timer = setInterval(addTime, 1000, this.readArr);
}

util.inherits(DRTimeWLog, Duplex);

function addTime(readArr) {
  readArr.push((new Date()).toString());
}

// Called by Node.js when the consumer wants more data
DRTimeWLog.prototype._read = function readBytes(n) {
  var self = this;
  // Push queued timestamps until push() reports the buffer is full
  while (this.readArr.length) {
    var chunk = this.readArr.shift();
    if (!self.push(chunk)) {
      break;
    }
  }
  if (self.timer) {
    // Check again for new timestamps in 500 ms
    setTimeout(readBytes.bind(self), 500, n);
  } else {
    // Timer stopped: end the readable side
    self.push(null);
  }
};

DRTimeWLog.prototype.stopTimer = function () {
  if (this.timer) clearInterval(this.timer);
  this.timer = null;
};

// Called for every chunk written to the writable side
DRTimeWLog.prototype._write = function (chunk, enc, cb) {
  console.log('write: ', chunk.toString());
  cb();
};

var duplex = new DRTimeWLog();

duplex.on('readable', function () {
  var chunk;
  while (null !== (chunk = duplex.read())) {
    console.log('read: ', chunk.toString());
  }
});

duplex.write('SPLessons');
duplex.write('Node.js Tutorials');
duplex.write('Node.js Stream');
duplex.write('My first Duplex Stream Example');
duplex.write('Successfully Completed');
duplex.end();

// Stop producing timestamps after 6 seconds so the readable side can end
setTimeout(function () {
  duplex.stopTimer();
}, 6000);
[/c]

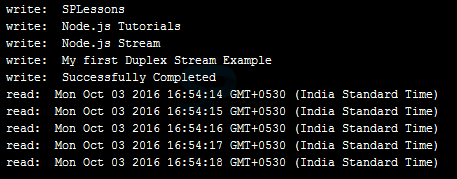

Result

Description

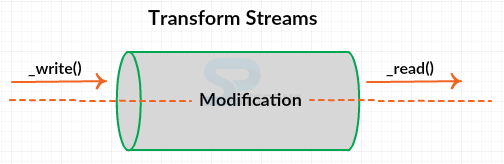

Transform streams manipulate the input data written to them and produce new output data. The figure below demonstrates the flow of transform streams.

Two common examples of transform streams in Node.js are:

- Crypto - used for encrypting and decrypting a file.

- Zlib - used for compressing and decompressing a file.
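Because zlib and crypto streams are both transform streams, they can be chained together. The sketch below uses stream.pipeline() to compress, encrypt, decrypt and decompress an in-memory string, then checks that the round trip restores the original. The AES-256-CBC key and IV are random values generated only for illustration; this is not a key-management scheme.

```javascript
const crypto = require('crypto');
const zlib = require('zlib');
const { Readable, Writable, pipeline } = require('stream');

const original = 'Node.js Streams\n'.repeat(100);
let restored = '';

// Random key (32 bytes) and IV (16 bytes) for AES-256-CBC, illustration only
const key = crypto.randomBytes(32);
const iv = crypto.randomBytes(16);

// Collects the final output in memory
const collector = new Writable({
  write(chunk, encoding, callback) {
    restored += chunk.toString();
    callback();
  },
});

// Every stage between the source and the collector is a transform stream
pipeline(
  Readable.from([original]),
  zlib.createGzip(),
  crypto.createCipheriv('aes-256-cbc', key, iv),
  crypto.createDecipheriv('aes-256-cbc', key, iv),
  zlib.createGunzip(),
  collector,
  (err) => {
    if (err) {
      console.error('Pipeline failed:', err.message);
    } else {
      console.log('Round trip matches original:', restored === original);
    }
  }
);
```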

Example

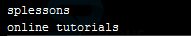

The code below demonstrates a transform stream that manipulates its input: a message given in uppercase is displayed in lowercase.

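[c]
var stream = require('stream');
var util = require('util');

// Fall back to the readable-stream package only on very old Node.js versions
var Transform = stream.Transform || require('readable-stream').Transform;

// A transform stream that converts every chunk to lowercase
function Lower(options) {
  if (!(this instanceof Lower)) {
    return new Lower(options);
  }
  Transform.call(this, options);
}

util.inherits(Lower, Transform);

// Called for every chunk written to the stream
Lower.prototype._transform = function (chunk, enc, cb) {
  var lowerChunk = chunk.toString().toLowerCase();
  this.push(lowerChunk); // pass the transformed data to the readable side
  cb();                  // signal that this chunk has been processed
};

var lower = new Lower();

// Print whatever comes out of the transform stream
lower.pipe(process.stdout);

lower.write('SPLESSONS\n');
lower.write('ONLINE TUTORIALS');
lower.end();
[/c]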
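The same transform can be written more compactly with the simplified Transform constructor. Instead of calling push(), the transformed chunk is passed as the second argument of the callback. The sketch below assumes a recent Node.js version and collects the output into a string rather than piping it to process.stdout.

```javascript
const { Transform } = require('stream');

// Same idea as the Lower stream above, using the simplified constructor
const lower = new Transform({
  transform(chunk, encoding, callback) {
    // callback(error, data) pushes data to the readable side
    callback(null, chunk.toString().toLowerCase());
  },
});

let output = '';
lower.on('data', (chunk) => { output += chunk; });
lower.on('end', () => console.log(output));

lower.write('SPLESSONS\n');
lower.write('ONLINE TUTORIALS');
lower.end();
```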

Description

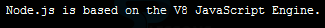

The pipe() method makes it easy to read data from a source and write it to a preferred destination: it reads from a readable stream and writes everything it reads to a writable stream. To try it, create a .txt file with a message or greeting, as shown below.

node1.txt

Node.js is based on the V8 JavaScript Engine.

Now create a .js file with the code as shown below.

[c]
var fs = require('fs');

var readableStream = fs.createReadStream('node1.txt');
var writableStream = fs.createWriteStream('node2.txt');

// Read node1.txt and write everything to node2.txt
readableStream.pipe(writableStream);
[/c]

Now check the working directory: a file node2.txt has been created containing the same message as node1.txt, as shown in the image below.
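pipe() does not forward errors from the source to the destination, so a failure such as a missing node1.txt must be handled on each stream separately. The sketch below uses stream.pipeline() instead. It reports an error from any stream to a single callback and cleans up both streams. The file names node1-demo.txt and node2-demo.txt in the OS temp directory are hypothetical stand-ins for node1.txt and node2.txt.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const { pipeline } = require('stream');

// Hypothetical stand-ins for node1.txt and node2.txt
const src = path.join(os.tmpdir(), 'node1-demo.txt');
const dest = path.join(os.tmpdir(), 'node2-demo.txt');
fs.writeFileSync(src, 'Node.js is based on the V8 JavaScript Engine.\n');

let copied = null;

// Copy src to dest; any error from either stream reaches the callback
pipeline(
  fs.createReadStream(src),
  fs.createWriteStream(dest),
  (err) => {
    if (err) {
      console.error('Copy failed:', err.message);
      return;
    }
    copied = fs.readFileSync(dest, 'utf8');
    console.log('Copied:', copied);
  }
);
```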

Description

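Stream chaining connects multiple streams by calling pipe() on the result of a previous pipe(), so data flows through several streams in sequence. It is commonly used to compress and decompress files. There are numerous ways to archive a file, but chaining and piping is one of the easiest. The code snippet below demonstrates compressing a file using gzip.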

[c]
var fs = require('fs');
var zlib = require('zlib');

// A gzip transform stream
var gzip = zlib.createGzip();
var readablestream = fs.createReadStream('output1.txt');
var writablestream = fs.createWriteStream('output1.txt.gz');

// Chain: read output1.txt -> compress -> write output1.txt.gz
readablestream
  .pipe(gzip)
  .pipe(writablestream)
  .on('finish', function () {
    console.log('Successfully compressed the file');
  });
[/c]

Running the above code produces the output shown in the image below.

Now check the working directory: the content of output1.txt has been compressed into output1.txt.gz.
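The chain can be reversed to decompress the file again. The sketch below assumes a recent Node.js version. It first uses zlib.gzipSync() to create a hypothetical compressed sample file in the OS temp directory (standing in for output1.txt.gz). It then chains a file read stream, zlib.createGunzip() and a file write stream with stream.pipeline().

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const zlib = require('zlib');
const { pipeline } = require('stream');

// Hypothetical file names in the OS temp directory
const gzFile = path.join(os.tmpdir(), 'output1-demo.txt.gz');
const outFile = path.join(os.tmpdir(), 'output1-demo-restored.txt');

// Prepare a compressed sample file (stands in for output1.txt.gz)
fs.writeFileSync(gzFile, zlib.gzipSync('Node.js Tutorials\n'));

let restored = null;

// Chain: read compressed file -> gunzip -> write plain file
pipeline(
  fs.createReadStream(gzFile),
  zlib.createGunzip(),
  fs.createWriteStream(outFile),
  (err) => {
    if (err) {
      console.error('Decompression failed:', err.message);
      return;
    }
    restored = fs.readFileSync(outFile, 'utf8');
    console.log('Successfully decompressed the file');
  }
);
```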

Key Points

- A Node.js stream can be readable, writable, or both.

- Node.js streams are an efficient way to move data from a source to a destination.

- All Node.js streams are instances of EventEmitter.